I was rather happy to be invited to help Marshmallow Laser Feast complete their latest VR project. I've got the VR bug now!

The first chapter of Treehugger: Wawona is centred on nature's cathedral, the giant Sequoia from the famous Sequoia National Park (California, USA). Wawona is the Miwok (local Native American) word for ‘hoot of an owl’, imitating the sound of the Northern Spotted Owl, which is believed to be the tree’s spiritual guardian.

Participants are invited to don a VR headset, place their heads into the tree’s knot and be transported into the Sequoia’s secret inner world. The longer you hug the tree, the deeper you drift into ‘treetime’: a hidden dimension that lies just beyond the limit of our senses. Audiences embark on a journey of abstract visualisation, following a single drop of water as it traverses from root to canopy in these enormous living structures.

Treehugger is currently showing at the Southbank Centre, London. For opening times, see southbankcentre.co.uk/whats-on/117900-treehugger-2016

Credits:

Concept by: Marshmallow Laser Feast

Direction: Barney Steel, Ersin Han Ersin, Robin McNicholas

Collaborating Artist: Natan Sinigaglia

Executive Producer: Eleanor (Nell) Whitley

Senior Producer: Mike Jones

Senior Producer (US): Armand Weeresinghe

Production Manager: Mark Geary

Production Support: Cordelia MacDonald

Binaural Sound Designer / Sonic Artist / Audio Capture: Mileece I’Anson

Spatialisation Audio System Designer: Antoine Bertin

3D Designer: Harvard Tveito

VVVV Developers: Chris Plant, Tebjan Halm

Junior Developer: Laine Kočãne

Photogrammetry & VFX Supervisor: Scott Metzger

LIDAR Scanning & Photogrammetry: Mimic

Root System Modelling: Ironklad

Installation Technologist: Hayden Anyasi

Tree Fabrication: Octant Objects / Other Fabrications / ML Fabcuts

With thanks to:

Natural History Museum

Salford University

PNY

3Dception

The Macaulay Library at the Cornell Lab of Ornithology, Ithaca, New York

Treehugger is commissioned by Cinekid Foundation, STRP, Southbank Centre and Migrations.

For Flatpack 10 this year, I showed Frequency Response #3 and also debuted Brian Duffy's new work “Instead Of Faint Spirit”.

For the past 5 years Brian Duffy (Modified Toy Orchestra) has been writing an analogue love letter to the memory of Trish Keenan entitled “Instead Of Faint Spirit”, using only a Roland System 100 monophonic analogue synth made in Japan in 1975. Brian describes it as “impossible to perform live” due to the hundreds of individual layers painstakingly overlaid on each track, so tonight he and Chris Plant will present a “live mix” of the album. Chris Plant will provide live visuals created using various vintage techniques, including a handmade analogue video synthesizer, circuit bent cathode ray televisions, laser lumia and other assorted oddities. Modern contraptions such as computers may be involved, but will be kept to a minimum, mostly as signal generators rather than image sources.

I used a mixture of oscilloscopes: a standard scope with a camera pointed at its screen, on which I produced vector graphics, and some CRT televisions whose coils I hacked into so they displayed the audio feeds Brian was playing.
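Drawing vector graphics on a scope is commonly done in XY mode, where one signal drives horizontal deflection and the other vertical, so a stereo audio output can act as a crude vector display. The text doesn't say how the shapes were generated; this is a minimal sketch assuming XY mode with the left channel on X and the right on Y. It computes a Lissajous figure (a circle for a 1:1 ratio with a 90° phase offset) and writes it to a WAV file using only the standard library.

```python
import math
import struct
import wave


def lissajous(freq_x, freq_y, phase, seconds, rate=48000):
    """Return (left, right) lists of float samples in -1..1.

    Assumes left drives the scope's X input and right drives Y (XY mode).
    """
    n = int(seconds * rate)
    left = [math.sin(2 * math.pi * freq_x * i / rate) for i in range(n)]
    right = [math.sin(2 * math.pi * freq_y * i / rate + phase) for i in range(n)]
    return left, right


def write_stereo_wav(path, left, right, rate=48000, gain=0.8):
    """Write the pair as 16-bit stereo PCM so it can be played out of a sound card."""
    frames = bytearray()
    for l_val, r_val in zip(left, right):
        # Interleave one left and one right 16-bit sample per frame (little-endian)
        frames += struct.pack("<hh", int(l_val * gain * 32767), int(r_val * gain * 32767))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))
```

For example, `l, r = lissajous(200, 200, math.pi / 2, 5)` followed by `write_stereo_wav("circle.wav", l, r)` should trace a circle; a 3:2 frequency ratio gives a more complex Lissajous pattern.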

I also created a video synth using VGA-VOLT, a simple hack that wires into the RGB pins of a VGA cable so that you can send audio signals to draw waveforms across the raster of the screen. It was a fascinating project that I'm going to continue exploring.
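To see why audio on the colour pins draws bands rather than fine detail, it helps to map each pixel to the moment the display draws it. This sketch assumes the standard 640x480 at about 60 Hz VGA mode (25.175 MHz pixel clock, 800 x 525 total including blanking) and an audio signal already scaled into the pin's 0 to 0.7 V range; neither detail is given in the text.

```python
import math

# Standard 640x480 @ ~60 Hz VGA timing (assumed; the mode actually used isn't stated)
PIXEL_CLOCK_HZ = 25_175_000
H_TOTAL, V_TOTAL = 800, 525        # pixels per line / lines per frame, including blanking
H_VISIBLE, V_VISIBLE = 640, 480


def pixel_time(row, col):
    """Seconds since the start of the frame at which the beam draws (row, col)."""
    return (row * H_TOTAL + col) / PIXEL_CLOCK_HZ


def render_channel(freq_hz, phase=0.0, step=1):
    """Intensity (0..1) one colour pin would show if driven by a sine at freq_hz.

    Assumes the audio is offset and scaled into the pin's range, so -1..1 maps to 0..1.
    `step` subsamples the grid to keep the pure-Python loop quick.
    """
    return [
        [0.5 * (1.0 + math.sin(2 * math.pi * freq_hz * pixel_time(r, c) + phase))
         for c in range(0, H_VISIBLE, step)]
        for r in range(0, V_VISIBLE, step)
    ]


def visible_cycles(freq_hz):
    """How many waveform cycles (bright/dark bands) fit in the visible picture."""
    return freq_hz * pixel_time(V_VISIBLE, 0)
```

Each line lasts about 31.8 µs, so a 600 Hz tone barely changes along a line and draws roughly nine horizontal bands (`visible_cycles(600)` is about 9.15); any tone that isn't a whole multiple of the ~59.94 Hz frame rate makes the bands roll.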

Another project with Claudia Paz Studio

Pixel Flow explores the senses of visitors in a surrounding space generated by light and sound, in which they can express themselves freely. The feeling of being wrapped in a spiral of light pixels and sound is activated by the natural flow of the body.

CREDITS

Design & Concept:

Claudia Paz Lighting Studio

Project Management & Implementation

ARQUILEDS

Structure & System Design

César Castro

Art Direction

Claudia Paz

Interactive Programming

Chris Plant

Colour Burst

Sound Designer

Neil Spragg

Future Sound Design

Sound

Giancarlo Aita Campodonico

Equipo Profesionales

Installer

MAS Contratistas Generales

I was asked to join the project as developer and animator, as they wanted to include 3D scans, brainwaves and other biometric data captured from the choir. So I oversaw the 3D shoots, wrangled the massive amount of data, and we all got animating! Also fun: the music wasn't recorded until 36 hours before the show, so we had a mad rush to edit and render, as well as the usual 3D-mapping fun of fixing the model of the building when it didn't match up!


http://59productions.co.uk/project/the_harmonium_project

Flatpack Festival asked me to become Artist-In-Residence in the Photonics Department of Aston University, to make a response to the work they do there. My piece would appear in the run-up to Birmingham Library’s Light Fest, as part of an all-day event of lectures and demonstrations highlighting the Department's research.

Initially I planned to make a laser-based piece; however, considering the health and safety implications (not to mention the hundreds of school children likely to be in attendance), I decided that LEDs would be a much safer option.

All the demonstrations were based on the physics of light (colour mixing, refraction, etc.), which inspired me to create a more visceral piece: something to make you feel light, to perceive it and its effects on the brain.

To do this I made some 1.5 m light probes from translucent tubes, each featuring 170 individually controllable pixels: 85 facing the front and 85 facing the back. This let me control both the direct light and the indirect light bouncing off the surface behind the tubes, so I could colour the background and the detail separately.
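Addressing the front and back of a probe separately could look like the sketch below. The wiring order is an assumption: it treats each probe as one 170-pixel strip that runs up the front (indices 0 to 84) and folds back down the rear (85 to 169), which is common with LED strips but isn't stated in the text. The function names are hypothetical.

```python
PIXELS_PER_SIDE = 85
TOTAL_PIXELS = 2 * PIXELS_PER_SIDE  # 170 pixels per probe


def front_index(height):
    """Front pixel at `height` (0 = bottom). Assumes the strip starts at the bottom of the front face."""
    return height


def back_index(height):
    """Rear pixel at `height`. Assumes the strip folds over the top and runs back down the rear."""
    return TOTAL_PIXELS - 1 - height


def compose(background_rgb, detail):
    """Build one probe frame as a list of 170 RGB tuples.

    background_rgb: colour for every rear-facing pixel (the indirect glow off the wall).
    detail: 85 RGB tuples for the front-facing pixels, bottom to top.
    """
    if len(detail) != PIXELS_PER_SIDE:
        raise ValueError("expected one colour per front pixel")
    frame = [(0, 0, 0)] * TOTAL_PIXELS
    for h in range(PIXELS_PER_SIDE):
        frame[front_index(h)] = detail[h]
        frame[back_index(h)] = background_rgb
    return frame
```

Keeping the background as a single colour and the detail as a per-pixel list mirrors the split described above: a slow wash on the wall behind, with finer changes on the tube itself.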

My initial plan was to create an interactive piece, but adding audio revealed a further layer, which I decided to experiment with. I took the frequencies of red, green and blue light and dropped them down many octaves so that they became audible. I then used the activation of the red, green and blue pixels to control the volumes of these frequencies. Could it be possible, I wondered, to perceive colours through sound? I discovered that this audible drone, combined with a slow colour change, produced a very powerful emotional effect, akin to someone flipping a “calmness switch”, if you will. In this state one became very ‘present’; indeed, during the event many people commented that they felt as if they had stepped into a different world.
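The octave-dropping step can be sketched as repeated halving of the optical frequency f = c / λ. The wavelengths below (700, 530 and 470 nm) are representative values chosen for illustration, not the ones used in the piece, and the 500 Hz ceiling is likewise an assumption. The mixer simply scales each tone by its channel's level, as described above.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

# Representative wavelengths (assumptions; the piece's actual values aren't given)
WAVELENGTHS_NM = {"red": 700.0, "green": 530.0, "blue": 470.0}


def light_frequency(wavelength_nm):
    """Optical frequency in Hz: f = c / wavelength."""
    return SPEED_OF_LIGHT / (wavelength_nm * 1e-9)


def drop_octaves(freq_hz, ceiling_hz=500.0):
    """Halve freq_hz until it falls below ceiling_hz; return (octaves dropped, new freq).

    Each step halves the frequency, so an input at or above the ceiling
    lands in [ceiling_hz / 2, ceiling_hz).
    """
    octaves = 0
    while freq_hz >= ceiling_hz:
        freq_hz /= 2.0
        octaves += 1
    return octaves, freq_hz


def mix_sample(t, rgb, tones):
    """One mono sample at time t: each tone's volume follows its colour channel level (0..1)."""
    total = sum(level * math.sin(2 * math.pi * f * t) for level, f in zip(rgb, tones))
    return total / len(tones)  # keeps the sum within -1..1
```

With these assumed wavelengths, red drops 40 octaves to about 389.5 Hz, while green and blue each drop 41 octaves, to about 257.2 Hz and 290.1 Hz.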

I further refined the audio by adding some sub-octaves to the frequencies, fine-tuning the effect, and experimented with the interplay between the fore- and background illumination until I was happy with the result.
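Adding sub-octaves means layering copies of each tone at f/2, f/4 and so on beneath it. In this sketch the number of sub-octaves and the halving of the gain per octave are assumptions standing in for the by-ear tuning described above.

```python
import math


def with_sub_octaves(freq_hz, count=2, falloff=0.5):
    """Return [(freq, gain), ...]: the tone plus `count` sub-octaves at f/2, f/4, ...

    Gains fall off geometrically (an assumption; the real balance was tuned by ear).
    """
    return [(freq_hz / 2 ** k, falloff ** k) for k in range(count + 1)]


def render(partials, t):
    """Sum the partials at time t, normalised by total gain so the result stays within -1..1."""
    norm = sum(g for _, g in partials)
    return sum(g * math.sin(2 * math.pi * f * t) for f, g in partials) / norm
```

Because every partial sits an exact octave below the original, the added tones thicken the drone without introducing new pitch classes.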

I feel I have come full circle, back to the beginning of my interest in light: I am once again hand-constructing light experiments, but now with the kind of technology I could only have dreamed about 20 years ago. I designed these probes to be multifunctional, and I intend to continue my investigation in other kinds of spaces through the exploration of minimal colour fields and more kinetic installations.

The piece has since been shown at the Seeing Sound festival at Bath Spa University (#2), a fascinating weekend of audiovisual performances and academic papers, and at Flatpack10 (#3), where Fluid Radio wrote: "Chris Plant’s installation ‘Frequency Response’ drew on correspondences between colour and music theory to create a soothing, subtle blend of light and sound."

Something I had no idea about was using fibre optics as sensors. Given how much of my work uses sensors, this is something I'm going to look into. I had a really good chat with Michal Zubel, one strand of whose research is low-budget implementations of sensor read-back using webcams and DIY spectrometers (more info on spectrometers can be found at Public Lab).

The theory is that grating patterns can be etched inside a piece of fibre, either glass or polymer. When you shine light down the cable, each grating reflects a specific colour of light that depends on its spacing. The clever part is that if the fibre is distorted, the spacing of the grating changes, and so does the colour of the reflected light. You can add different gratings at different positions along the length of the cable and so get multiple sensors in one optical fibre. Given that the core of a fibre is around 8 µm in diameter, you need some serious kit to create the gratings, but I believe there are commercial suppliers.
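What the paragraph describes is a fibre Bragg grating (FBG). The sketch below uses the standard relations λ_B = 2·n_eff·Λ for the reflected wavelength and Δλ ≈ λ_B·(1 − p_e)·ε for axial strain at constant temperature. The effective index, grating period and p_e ≈ 0.22 are typical textbook values for silica fibre, assumed here; such gratings are commonly read out in the near infrared (around 1550 nm) rather than at visible colours.

```python
# Typical textbook value for silica fibre (an assumption, not a measured figure)
STRAIN_OPTIC_COEFF = 0.22  # effective photo-elastic coefficient p_e


def bragg_wavelength(n_eff, period_m):
    """Reflected (Bragg) wavelength in metres: lambda_B = 2 * n_eff * grating period."""
    return 2.0 * n_eff * period_m


def strain_shift(lambda_b, microstrain, p_e=STRAIN_OPTIC_COEFF):
    """Wavelength shift for axial strain at constant temperature: lambda_B * (1 - p_e) * strain."""
    return lambda_b * (1.0 - p_e) * microstrain * 1e-6


def read_sensors(base_wavelengths, measured_peaks, p_e=STRAIN_OPTIC_COEFF):
    """Match each measured peak to its nearest grating and convert the shift back to microstrain.

    Several gratings on one fibre can be told apart as long as their base wavelengths
    are far enough apart that the shifted peaks never cross.
    """
    strains = {}
    for peak in measured_peaks:
        base = min(base_wavelengths, key=lambda b: abs(peak - b))
        strains[base] = (peak - base) / (base * (1.0 - p_e)) * 1e6
    return strains
```

With these values, a grating with n_eff = 1.447 and a 535.6 nm period reflects at about 1550 nm, and one microstrain shifts that peak by roughly 1.2 pm, which is why dedicated interrogators or spectrometers are needed to read them.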

I'm not sure I will investigate this technique directly, as I have also started thinking about coupling fibres to a camera's CCD and seeing whether I can detect light-intensity changes along the length of the fibre. This might make it possible to turn a bundle of fibres into an interactive 70s-style lamp, for example, or something more convoluted, of course!
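One way the camera-coupled idea could work: image the ends of a fibre bundle onto the sensor, record each fibre's resting brightness, then flag fibres whose light drops (for example when a fibre is covered or bent). Everything here is hypothetical: the frame is a plain list of greyscale rows rather than a live camera feed, and `FibreBundle`, the fibre positions and the 30% threshold are made-up names and parameters.

```python
def mean_brightness(frame, x, y, radius=2):
    """Average pixel value in a small square window around (x, y); frame is rows of 0..255 values."""
    values = [
        frame[r][c]
        for r in range(max(0, y - radius), min(len(frame), y + radius + 1))
        for c in range(max(0, x - radius), min(len(frame[0]), x + radius + 1))
    ]
    return sum(values) / len(values)


class FibreBundle:
    """Hypothetical sensor: each fibre end is imaged at a known (x, y) on the camera sensor."""

    def __init__(self, positions, threshold=0.3):
        self.positions = positions   # list of (x, y) pixel coordinates, one per fibre
        self.threshold = threshold   # fractional brightness drop that counts as a touch (assumed)
        self.baseline = None

    def calibrate(self, frame):
        """Record each fibre's resting brightness from a frame with nothing touching the fibres."""
        self.baseline = [mean_brightness(frame, x, y) for x, y in self.positions]

    def touched(self, frame):
        """Return indices of fibres whose brightness fell by more than the threshold."""
        result = []
        for i, (x, y) in enumerate(self.positions):
            base = self.baseline[i]
            now = mean_brightness(frame, x, y)
            if base > 0 and (base - now) / base > self.threshold:
                result.append(i)
        return result
```

Averaging a small window rather than reading a single pixel makes the reading less sensitive to camera noise and slight misalignment of the fibre ends.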

Another invitation from Strukt, to work on a project in January, led to a rather interesting job: tracking, masking and projecting onto dancers, as well as some mapped columns on the set.

Nespresso - "Pixie" Launch Event from Strukt Studio on Vimeo.

There is a making-of on the Strukt page, as well as some more photos.

The project is also featured in Barco's Red Magazine issue 6

Marshmallow Laser Feast approached me to join the team for this augmented-reality web advert. They wanted to do all the CG effects in the real world, in real time, mapped to a free-roaming camera. We used vvvv and vux's DX11 pack to drive three HD outputs from each of two PCs, rendering onto 8 million LiDAR particles with lighting effects, along with a variety of other CG effects.

MoCap + real-world LiDAR data + CG + Vicon + light projection: with help from Andy Serkis’ Imaginarium, Marshmallow Laser Feast visualise the invisible data and intelligent sensors in Volkswagen's latest Passat. These ‘Invisible Made Visible’ sequences are made in real time as one continuous in-camera shot using vvvv.

For the studio shoot, infra-red sensors were placed on the camera and tracked by a Vicon rig, allowing the cameraman to move freely about the set. MLF's proprietary system translated the rig's positional data in real time and projected the CG environment around the car accordingly. The end result is beautifully crafted moving images whose perspective perfectly matches the camera's angle. Ultimately, the technology is designed so that CG scenes can be rendered in real time while interacting with a moving camera, shifting simultaneously according to the protagonist's point of view.
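The key idea, rendering the virtual scene from wherever the tracked camera currently is, can be illustrated with a plain pinhole projection. This is a generic sketch, not MLF's proprietary system: it assumes the tracking rig supplies a camera position and a point the camera is looking at each frame, and it ignores lens distortion and the separate step of re-projecting the result through the projectors.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)


def project(point, cam_pos, cam_target, up=(0.0, 1.0, 0.0), fov_y_deg=40.0, aspect=16 / 9):
    """Project a world-space point into normalised screen coords (-1..1) for the tracked camera.

    Returns None for points behind the camera. `up` must not be parallel to the view direction.
    """
    forward = normalize(sub(cam_target, cam_pos))
    right = normalize(cross(forward, up))
    true_up = cross(right, forward)
    rel = sub(point, cam_pos)
    # Express the point in the camera's own axes
    x, y, z = dot(rel, right), dot(rel, true_up), dot(rel, forward)
    if z <= 0:
        return None
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return (f * x / (z * aspect), f * y / z)
```

Re-running this with every new tracked pose is what keeps the virtual geometry locked to the real camera's perspective as it moves.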

In April 2012 Elliot Woods and I were approached to create an application to project onto a car on a turntable. The brief expanded as the project went on: by the end, there was to be a different car (of the same model) for every show, they wanted to be able to run multiple shows per day, and the alignment was to be done by a member of staff at the Land Rover Experience.

A challenging brief.

The building finally opened in December 2012. Lining up the car takes approximately 10 to 15 minutes using three sliders and a rotary control on an iPad, with a couple of buttons to spin the turntable around so you can check the setup.
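The alignment controls amount to nudging a virtual copy of the car until it sits on the real one. The text doesn't say what each slider does, so this sketch assumes, hypothetically, that the three sliders set x, y and z offsets within ±0.5 m and the rotary control sets yaw about the vertical axis; the function names and ranges are illustrative only.

```python
import math


def slider_to_offset(value, span_m=0.5):
    """Map a 0..1 slider to -span..+span metres (hypothetical range)."""
    return (value - 0.5) * 2.0 * span_m


def apply_alignment(vertex, offset, yaw_deg):
    """Rotate a model vertex about the vertical (y) axis, then translate it.

    offset: (dx, dy, dz) in metres from the three sliders (assumed mapping).
    yaw_deg: angle from the rotary control.
    """
    x, y, z = vertex
    a = math.radians(yaw_deg)
    xr = x * math.cos(a) + z * math.sin(a)
    zr = -x * math.sin(a) + z * math.cos(a)
    dx, dy, dz = offset
    return (xr + dx, y + dy, zr + dz)


def align_model(vertices, sliders, rotary_deg):
    """Apply one slider/rotary state to every vertex of the virtual car."""
    offset = tuple(slider_to_offset(v) for v in sliders)
    return [apply_alignment(v, offset, rotary_deg) for v in vertices]
```

Keeping the controls to a few bounded, independent parameters is one way a non-specialist operator could realistically line up a fresh car for each show.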

It uses seven projection design projectors, all running at 1920x1200: three for the back wall, two for the car and two for the floor. We also had to minimise any visible shadows. Content was rendered at 4K and all runs off one PC.

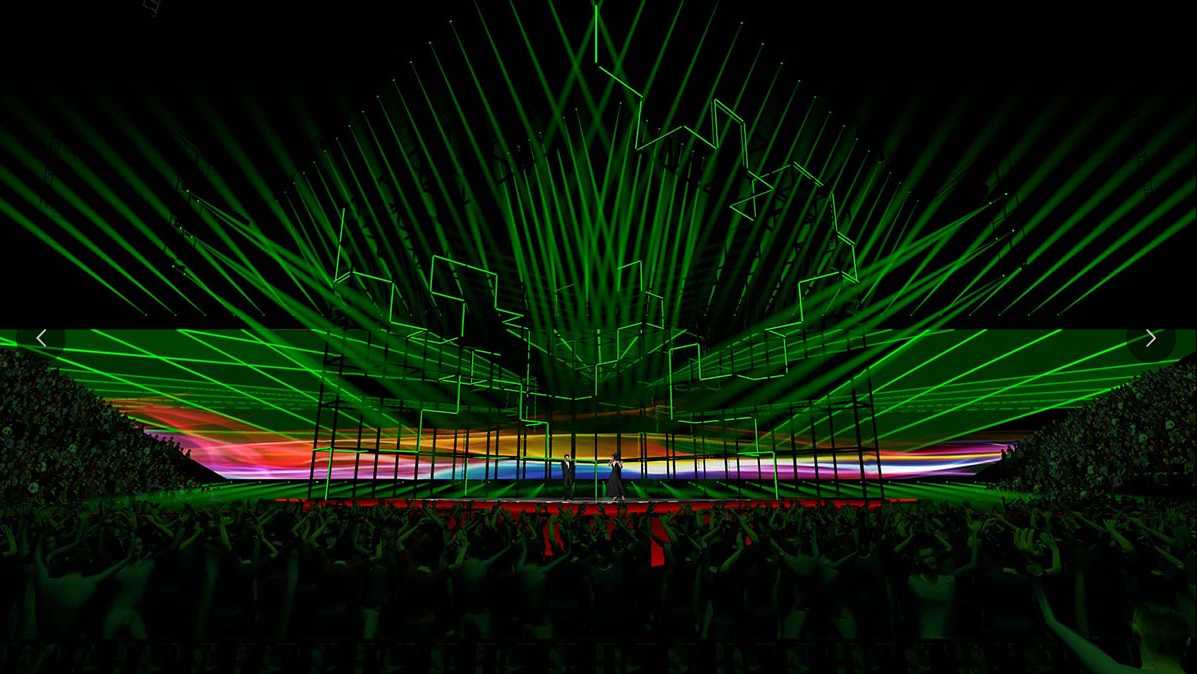

For Eurovision 2014 in Copenhagen, I was approached by Nicoline Refsing, the creative director for the video screens, to produce renders and visualisations. I was also to create some video pieces for the 3D LED cubus that was a feature of the set.

We decided we needed a real-time 3D visualiser (see video at bottom of the page) to best critique the renders. After trying out what was on the market, I decided to build a visualiser myself so we could have extras such as reflections, lights and pyros.

For comparison, here is one of the previz videos from d3/Disguise, the market leader at the time.